Procket Networks PRO/8801 router evaluation

Edoardo Martelli, CERN, 10 March 2004

Abstract

Procket Networks lent two PRO/8801 routers to the Datatag project for evaluation. This document reports some of the tests that were performed.

Table of Contents

Overview

BGP and OSPF

MPLS LSP: RSVP Signaling

MPLS LSP: CCC tunnel performance

IPv6 Land Speed Record attempt

Differentiated Services based QoS

Overview

Two Procket PRO/8801 routers, on loan from Procket Networks, were installed in the Datatag testbed. Several tests were performed to evaluate their reliability (hardware and software) and their interoperability with other vendors' routers.

The PRO/8801 hardware was very solid and well engineered: the cards were easy to insert and remove, and all the connectors were on the front side and easily accessible. All the interfaces tested had LC connectors.

The software proved to be very stable, even in beta releases; interoperability with Cisco IOS and Juniper Junos was very good (no problems were noticed). It lacked only a few features that we would have liked to try, such as IPv6 routing protocols and policy-based routing. The Command Line Interface was Cisco-like, and so easy to use for anyone used to configuring Cisco routers.

The documentation provided was complete, with many configuration examples that helped first-time users.

The support from the Technical Staff was effective and responsive: all questions were answered quickly, and the problems were solved.

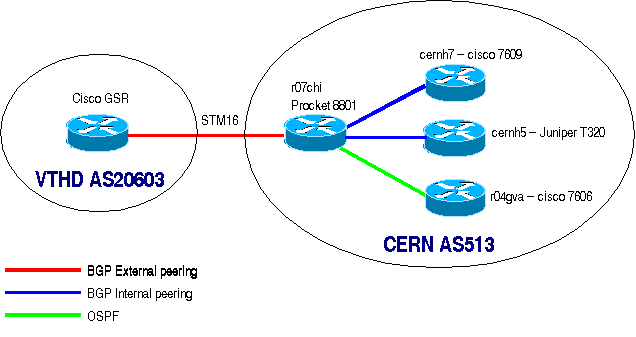

BGP and OSPF

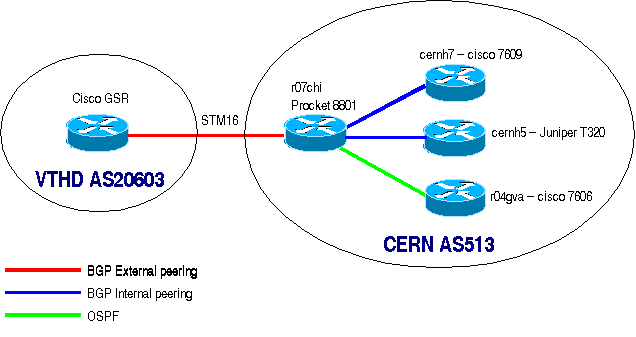

The Procket PRO/8801 in Geneva was added to the CERN production network in order to connect the VTHD Autonomous System to the CERN network.

The PRO/8801 was configured almost like any other production router, with Radius authentication and running BGP and OSPF.

VTHD had an STM16 line from Lyon (FR) to Geneva (CH) and announced four prefixes via an external BGP peering between their Cisco GSR and the PRO/8801. The PRO/8801 was connected with a 1Gbps Ethernet link to the CERN external network switch (Cisco 6506) and with a 1Gbps Ethernet link to a Datatag router (r04gva, Cisco 7606). The routing protocols within AS513 were in a hybrid configuration, since the PRO/8801 belonged both to the Datatag test-bed and to the CERN production network. It spoke OSPF with the Datatag routers (which ran a separate OSPF process), while it had iBGP peerings with two production routers: cernh5 (Juniper T320) and cernh7 (Cisco 7609).

This configuration was not straightforward as far as routing protocols are concerned. First of all, an inbound filter was applied on the eBGP peering with VTHD in order to accept only the four agreed prefixes. Via iBGP, it received the full Internet routing table (more than 120000 prefixes) from cernh7, except for the prefixes coming from Geant, which were received from cernh5. The full routing table was forwarded to the VTHD peer. The four prefixes received from VTHD were redistributed into the Datatag test-bed via OSPF.
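
The filtering decisions described above can be sketched in a few lines of Python. This is only an illustration of the policy logic, not Procket code: the function names, the exact-match treatment of prefixes and the way the Cisco-style "_" delimiter is emulated are assumptions. The prefixes, the Geant AS number (20965) and the weight (140) are taken from the configuration listed in this section.

```python
import re
from ipaddress import ip_network

# Prefixes agreed with VTHD (from the VTHD-ROUTES test-list).
VTHD_ROUTES = {
    ip_network("193.252.113.0/24"),
    ip_network("193.253.175.0/24"),
    ip_network("193.252.226.0/24"),
    ip_network("193.48.21.64/26"),
}

# Emulation of the as-path expression "^.*_20965_.*$": "_" is assumed to
# match the start, the end, or a space of the AS-path string.
GEANT_ROUTES = re.compile(r"(^|\s)20965(\s|$)")


def vthd_in(prefix: str) -> bool:
    """Accept a route from the VTHD eBGP peer only if it is an agreed prefix.

    Assumption: 'match ip prefix' is treated as an exact match.
    """
    return ip_network(prefix) in VTHD_ROUTES


def cernh7_in(as_path: str):
    """Return the weight set on a route from cernh7, or None if rejected."""
    if GEANT_ROUTES.search(as_path):
        return None   # statement 10: routes crossing Geant are rejected
    return 140        # statement 20: set-bgp-weight, then accept


print(vthd_in("193.48.21.64/26"))      # agreed prefix
print(vthd_in("10.0.0.0/8"))           # anything else is filtered
print(cernh7_in("20965 1299 3356"))    # Geant route, taken from cernh5 instead
```

Routes rejected by cernh7-in are not lost: as described above, the Geant prefixes are learned over the second iBGP session with cernh5.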

Comments

The PRO/8801 turned out to be very stable and reliable, even with a beta release of the operating system. It has been running in this configuration for more than two months without any problem.

The configuration of the routing policies was easy to understand and implement, and worked perfectly.

The command line interface was Cisco-like and did not require any training. Software upgrades and downgrades were easy to perform, and they worked flawlessly.

Configuration

router-name r07gva

!

ip service telnet

ip service ftp

!

ip domain-list cern.ch

ip domain-list datatag.org

!

ip name-server 192.65.185.10

ip name-server 192.91.244.10

!

aaa new-model

aaa authentication login default radius local

aaa authentication enable default radius local

aaa authorization exec default radius local

aaa authorization commands 0 default radius local

aaa accounting commands 0 default start-stop radius

service password-encryption

ip radius source-interface Loopback0

radius-server host 192.91.244.20 key xxxxxxxxxx

!

interface Loopback0

ip address 192.91.239.249/32

ip router ospf 1297

ip ospf area 0

!

interface MgmtEther0

ip address 192.91.244.50/27

!

!

interface POS0/2/0

description ----> VTHD Lyon

clock source internal

pos framing sonet

mtu 9188

ip address 193.252.226.186/30

encapsulation ppp

!

interface GigEther0/3/0

description ----> cernh7

ip address 192.65.184.73/30

!

interface GigEther0/3/1

description ----> trunk to r04gva

!

interface GigEther0/3/1.22

description ----> p2p to r04gva

encapsulation vlan 22

ip address 192.91.239.201/30

ip router ospf 1297

ip ospf area 0

!

interface GigEther0/3/2

description ----> sw6506-cixp

ip address 192.65.192.26/26

!

router ospf 1297

redistribute bgp 513 policy vthd-in

router-id 192.91.239.249

!

router bgp 513

neighbor 192.65.184.74

remote-as 513

description ----> cernh7

address-family ipv4 unicast

send-community

policy cernh7-in in

next-hop-self

neighbor 192.65.192.5

remote-as 513

description ----> cernh5

address-family ipv4 unicast

send-community

next-hop-self

soft-reconfiguration inbound

neighbor 193.252.226.185

remote-as 20603

description "----> AS20603_VTHD"

address-family ipv4 unicast

send-community

policy vthd-in in

!

!

policy test-list GEANT-ROUTES

match-any

match bgp as-path "^.*_20965_.*$"

!

policy test-list VTHD-ROUTES

match-any

match ip prefix 193.252.113.0/24

match ip prefix 193.253.175.0/24

match ip prefix 193.252.226.0/24

match ip prefix 193.48.21.64/26

!

policy action-list set-bgp-weight

set bgp weight 140

!

policy routing-rules cernh7-in

statement 10

if GEANT-ROUTES then return-reject endif

statement 20

do set-bgp-weight return-accept

!

policy routing-rules vthd-in

statement 10

if VTHD-ROUTES then return-accept endif

statement 65535

return-reject

!

line vty 0

exec-timeout 60

!

ntp server 192.91.244.10

!

route-context-config management

ip route 0.0.0.0/0 192.91.244.33

O.S. version

r07gva# sh version

PRO/1-MSE Procket Modular Service Environment

System Uptime: 57 day(s), 20:07:24

Protocol Uptime: 48 day(s), 23:40:53

System Release Version: 2.3.0.180-B

Build Information: Wed Oct 15 13:43:53 PDT 2003 (sw-build)

Kernel Version: 2.3.0.1-P PowerPC

Total Physical Memory: 112 MB kernel, 1919 MB user

BGP neighbors

r07gva# sh bgp summary

BGP router identifier 192.91.239.249, local AS number 513

BGP table version is 3231239, IPv4 Unicast config peers 3, capable peers 2

129682 network entries and 129682 paths using 12968200 bytes of memory

BGP attribute entries [24318/1556352], BGP AS path entries [21715/214132]

BGP community entries [195/3644], BGP clusterlist entries [10/60]

2899 received paths for inbound soft reconfiguration

2899 identical, 0 modified, 0 filtered received paths using 0 bytes

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd

192.65.184.74 4 513 952174 22965 3231239 0 0 2w1d 126778

192.65.192.5 4 513 89119 34372 3231239 0 0 1d20h 2899

193.252.226.185 4 20603 68904 910778 3231239 0 0 5d14h 4

Radius attributes

The Radius server had to be configured to return the following

attributes in order to allow the PRO/8801 to give enable privileges to

the authenticated users:

DEFAULT NAS-IP-Address == 192.91.239.249

PROCKET-PRIV-LVL = "15",

PROCKET-ALLOW-SHELL = "1",

PROCKET-CMD-RULES = "permit .*",

Cisco-AVPair = "shell:priv-lvl=15",

Cisco-AVPair = "shell:allow-shell*true",

Service-Type = Login-User,

Fall-Through = Yes

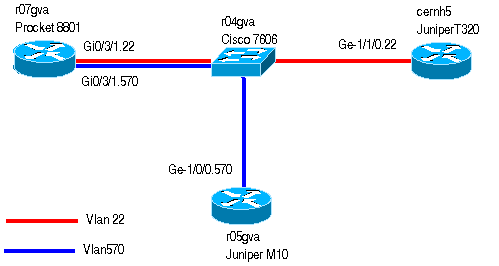

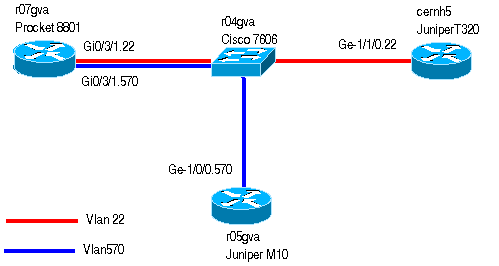

MPLS LSP: RSVP signaling

This test aimed at verifying the implementation of the RSVP protocol and of the Traffic Engineering extensions of the IGPs, and their compatibility with other vendors' implementations.

The picture above describes the test-bed. The goal was to place the PRO/8801 between the two Junipers, to test how the 8801 reacted to the bandwidth requirements of LSPs defined on the Juniper.

IS-IS with Traffic Engineering extensions was configured among the three routers. Unfortunately, since cernh5 was a production router that already ran OSPF in production, it was not possible to test the OSPF TE extensions as well.

In the first test, an LSP was defined from r05gva to cernh5 through r07gva. The interface Gi0/3/1.22 of r07gva was configured to have a maximum of 100Mbps available for RSVP reservations. As the bandwidth requirement of the LSP defined on r05gva was changed, the state of the LSP was always as expected, i.e. up for requests below 100Mbps, and down for requests above.
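
The behaviour observed in the first test follows the usual bandwidth admission rule: a reservation is accepted only if it fits in the bandwidth that is still unreserved on the link. The sketch below illustrates that rule only; the function name is hypothetical, the treatment of a request exactly equal to the limit is an assumption, and real implementations also take setup and hold priorities into account.

```python
def admit(requested_kbps: int, max_reservable_kbps: int, reserved_kbps: int = 0) -> bool:
    """Return True if a new reservation fits in the unreserved bandwidth."""
    return requested_kbps <= max_reservable_kbps - reserved_kbps


MAX_RESERVABLE = 100_000  # kbits/sec, as configured on Gi0/3/1.22 of r07gva

print(admit(95_000, MAX_RESERVABLE))    # 95 Mbps request fits: LSP up
print(admit(105_000, MAX_RESERVABLE))   # 105 Mbps request does not fit: LSP down
```

With the 95Mbps LSP established, 5000 kbits/sec remain unreserved on the link, so any further request above that value would be refused as well.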

In the second test, an LSP was defined from r07gva to r05gva, and one in the opposite direction. Everything worked as expected.

Comments

Unfortunately it wasn't possible to test more complicated configurations due to the lack of MPLS TE enabled routers in the test-bed. In any case, the behaviour of the PRO/8801 was always as expected: no problems or incompatibilities were noticed.

Configurations

r05gva.datatag.org

interfaces {

ge-1/0/0 {

description "----> r04gva.cern - Cisco 7606";

enable;

vlan-tagging;

mtu 9192;

unit 570 {

description "----> CCC tunnel to CNAF";

vlan-id 570;

family inet {

mtu 9174;

address 192.91.239.60/26;

}

family iso;

family mpls;

}

}

lo0 {

unit 0 {

family inet {

filter {

input local-access-control;

}

address 127.0.0.1/32;

address 192.91.239.253/32;

}

family iso {

address 49.0001.0000.0000.0105.00;

}

}

}

}

protocols {

rsvp {

interface all;

}

mpls {

explicit-null;

label-switched-path r05-r07-h5 {

from 192.91.239.60;

to 192.91.238.254;

bandwidth 95m;

}

interface all;

}

isis {

traffic-engineering enable;

interface ge-1/0/0.570 {

level 1 disable;

}

interface lo0.0 {

level 1 disable;

}

}

}

r07gva.datatag.org

router-name r07gva

!

interface Loopback0

ip address 192.91.239.249/32

ip router isis 490001

isis circuit-type level-2

!

interface Tunnel10

tunnel mode mpls te

tunnel destination 192.91.239.60

tunnel mpls te primary-lsp r07-r05

tunnel mpls te igp-shortcuts

!

interface GigEther0/3/1

description ----> trunk to r04gva

!

interface GigEther0/3/1.22

mpls te tunnels

mpls te bandwidth 100000

description ----> p2p to r04gva

encapsulation vlan 22

ip address 192.91.239.201/30

ip address 192.91.238.253/30 secondary

ip router isis 490001

isis circuit-type level-2

!

interface GigEther0/3/1.570

mpls te tunnels

mpls te bandwidth 500000

description ----> datatag vlan

encapsulation vlan 570

ip address 192.91.239.43/26

ip router isis 490001

isis circuit-type level-2

!

router isis 490001

net 49.0001.0000.0000.0107.00

mpls te level-1-2

!

mpls te explicit-path r07-r05

index 1 next-address 192.91.239.60

cernh5.cern.ch

interfaces {

ge-1/1/0 {

description "----> trunk to r04gva";

vlan-tagging;

mtu 9192;

encapsulation vlan-ccc;

gigether-options {

flow-control;

}

unit 22 {

description "----> r07gva - test MPLS edoardo";

vlan-id 22;

family inet {

address 192.91.238.254/30;

}

family iso;

family mpls;

}

}

lo0 {

unit 0 {

family inet {

filter {

input local-access-control;

}

address 127.0.0.1/32;

address 192.65.184.5/32;

}

family iso {

address 49.0001.0000.0000.0005.00;

}

}

}

}

protocols {

rsvp {

interface all;

}

mpls {

explicit-null;

label-switched-path cern_starlight {

from 192.65.184.54;

to 192.65.184.53;

no-cspf;

}

interface all;

}

isis {

traffic-engineering enable;

interface ge-1/1/0.22 {

level 1 disable;

}

interface lo0.0 {

level 1 disable;

}

}

}

LSP r05-r07-h5 requested 95Mbps, available on the PRO/8801 100Mbps

emartell@r05gva> show rsvp interface

RSVP interface: 6 active

Interface State Active resv Subscription Static BW Available BW Reserved BW Highwater mark

ge-1/0/0.570 Up 2 100% 1000Mbps 905Mbps 95Mbps 100Mbps

emartell@r05gva> show mpls lsp

Ingress LSP: 4 sessions

To From State Rt ActivePath P LSPname

192.91.238.254 192.91.239.60 Up 0 * r05-r07-h5

emartell@r05gva> show route protocol rsvp

inet.3: 2 destinations, 2 routes (2 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

192.91.238.254/32 *[RSVP/7] 00:29:10, metric 65535

> to 192.91.239.43 via ge-1/0/0.570, label-switched-path r05-r07-h5

r07gva# show mpls te link-management interface

Link ID:: GigEther0/3/1.22 (192.91.239.201)

Link Status:

Physical Bandwidth: 1000000 kbits/sec

Max Reservable BW: 100000 kbits/sec

BW Descriptors: 1 (Groups 1)

MPLS TE Link State: MPLS TE on, RSVP on, link-up

Outbound Admission: allow-if-room

Admin weight: 0 (IGP)

Unused Lock BW: 5000 kbits/sec

Local Protection Desired : Yes

r07gva# show ip rsvp session

Dest: 192.91.238.254, Tunnel ID: 683, Extended tunnel ID: 192.91.239.60

Sender: 192.91.239.60, LSP ID: 12, LSP state: Up, 00:03:06

LSP name: r05-r07-h5

Resv style: FF, Label in: 100000, Label out: 0

Tspec: rate: 95.000 Mbps, size: 11.325 MB

peak: Inf Mbps, m: 20, M: 1500

Path received from: 192.91.239.60 (GigE0/3/1.570)

Path sent to: 192.91.238.254 (GigE0/3/1.22)

Resv received from: 192.91.238.254 (GigE0/3/1.22)

Explicit route: 192.91.239.43 192.91.238.254

Recorded route: 192.91.239.60 (0x0)

LSP r05-r07-h5 requested 105Mbps, available on the PRO/8801 100Mbps

emartell@r05gva> show mpls lsp extensive

[...]

192.91.238.254

From: 192.91.239.60, State: Dn, ActiveRoute: 0, LSPname: r05-r07-h5

ActivePath: (none)

LoadBalance: Random

Encoding type: Packet, Switching type: Packet, GPID: IPv4

Primary State: Dn

Bandwidth: 105Mbps

Will be enqueued for recomputation in 22 second(s).

119 Dec 15 17:29:49 CSPF failed: no route toward 192.91.238.254[11 times]

118 Dec 15 17:24:52 Deselected as active

117 Dec 15 17:24:52 CSPF failed: no route toward 192.91.238.254

116 Dec 15 17:24:52 Clear Call

[...]

LSPs r05-r07 and r07-r05

emartell@r05gva> show mpls lsp

Ingress LSP: 5 sessions

To From State Rt ActivePath P LSPname

192.91.238.254 192.91.239.60 Up 0 * r05-r07-h5

192.91.239.43 192.91.239.60 Up 0 * r05-r07

Egress LSP: 3 sessions

To From State Rt Style Labelin Labelout LSPname

192.91.239.60 192.91.239.249 Up 0 1 SE 0 - r07-r05

r07gva# sh ip rsvp session

Dest: 192.91.239.43, Tunnel ID: 877, Extended tunnel ID: 192.91.239.60

Sender: 192.91.239.60, LSP ID: 1, LSP state: Up, 00:51:20

LSP name: r05-r07

Resv style: FF, Label in: 3, Label out: -

Tspec: rate: 0.000 Mbps, size: 0.000 MB

peak: Inf Mbps, m: 20, M: 1500

Path received from: 192.91.239.60 (GigE0/3/1.570)

Path sent to: localclient

Resv received from: localclient

Explicit route: 192.91.239.43

Recorded route: 192.91.239.60 (0x0)

Dest: 192.91.239.60, Tunnel ID: 23, Extended tunnel ID: 192.91.239.249

Sender: 192.91.239.249, LSP ID: 1, LSP state: Up, 00:39:13

LSP name: r07-r05

Resv style: SE, Label in: -, Label out: 0

Tspec: rate: 0.000 Mbps, size: 0.001 MB

peak: 0.000 Mbps, m: 64, M: 65535

Path received from: localclient

Path sent to: 192.91.239.60 (GigE0/3/1.570)

Resv received from: 192.91.239.60 (GigE0/3/1.570)

Explicit route: 192.91.239.43 192.91.239.60

Recorded route: <None>

r07gva# sh mpls te tunnels

Tunnel name: Tunnel10, Tunnel IOD: 23, Dest: 192.91.239.60 (TE node present)

Admin status: UP, Operational status: UP

NHLFE installed: NH addr: 192.91.239.60, NH IOD: 22, Out label: 0

Tunnel metric: 0 (default relative), IGP shortcuts configured

LSP name: r07-r05 (active), LSP type: Primary, LSP Tunnel: Tunnel10

LSP STATE: UP, LSP ID: 1, LSP Handle: [0xdc5b2238|0]

LSP Constraints:

Bandwidth : 0 (Setup Priority: 7, Hold Priority: 0)

Affinity : 0x0, Affinity Mask: 0x0

Hop Limit : 255

Fill style: Random-fill

LSP status flags:

Shutdown : No Run CSPF : Yes Record Route : No

Logging : No Deleted : No Cold Sec Skip : No

RSVP TxLst : No ERO Sent : Yes CSPF Pending : No

Facility Backup Desired : No In Use : No

LSP Timers:

Retry Enabled, Reoptimize Disabled

Retry: 00:00:00, Reoptimize: 00:00:00, Signalling: 00:00:00

Last UP transition: 00:40:14, Last DOWN Transition: never

Next-Hop Label Forwarding Info:

Next Hop: 192.91.239.60, Outgoing IOD: 22, Label: 0

ULIB Install status: Yes

CSPF Computation status:

Last CSPF Run: 00:40:14, Succeeded

Last Failure reason: No CSPF Error

Last RSVP error information:

Last error notification: never, error count 0

RSVP error code: 0, value: 0, node: 0.0.0.0

LSP Path Information:

Explicit Path Configured: None

Cost to destination: 40, Hops: 1

Computed Explicit Path (Hop Count: 1): 192.91.239.60

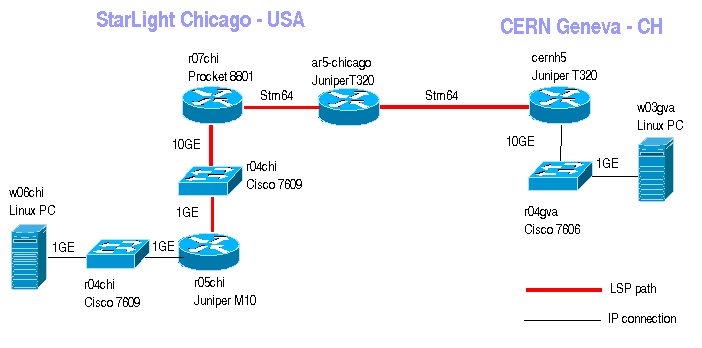

MPLS LSP: CCC tunnel performance

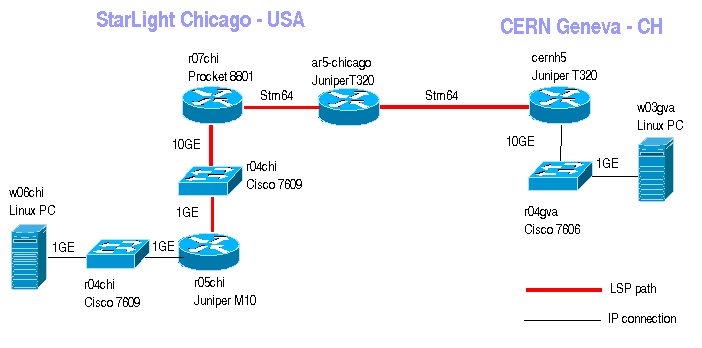

The CERN and Datatag networks use CCC tunnels for production and test traffic. CCC is a Juniper technology that builds L2 connections between pairs of interfaces on different routers, using LSPs. The aim of this test was to verify the interoperability of the MPLS TE features implemented in the Procket Networks routers.

The end points of a CCC tunnel must be Juniper routers, since CCC is a proprietary technology, but the end points are connected by an MPLS LSP, which is a standard. In this test, a PRO/8801 router was placed along the LSP path to verify the interoperability of its MPLS LSP and RSVP implementations (RSVP is used to exchange MPLS labels among routers).

The end points of the CCC tunnel were two Gigabit Ethernet subinterfaces on a Juniper T320 (cernh5.cern.ch) and a Juniper M10 (r05chi.datatag.org). The testbed had to be extended from Chicago to Geneva because it was necessary to use the same vlan-id in the tunnel endpoint subinterfaces. In this drawing, the Cisco 7609 r04chi is shown twice in Chicago because it was used to implement two different and independent VLANs (not routed).

Comments

The RSVP and MPLS implementations of the PRO/8801 interacted perfectly with the Juniper ones. The test was successful: RSVP correctly established neighbor adjacencies and exchanged labels along the path. The CCC connection, first tested without the PRO/8801 along the path, came up correctly as soon as the routing was changed to include it.

The performance was also good: it was possible to run a UDP stream of 940Mbps between two PCs through the CCC tunnel (the same throughput achieved along a traditional L3 path without MPLS).

The configuration of the Procket 8801 was in fact very simple: it was only necessary to activate the MPLS TE tunnel capabilities on the interfaces connected to the Juniper routers.

Configurations

Cisco 7606 - r04gva

Juniper T320 - cernh5

Juniper T320 - ar5-chicago

Procket 8801 - r07chi

Cisco 7609 - r04chi

Juniper M10 - r05chi

Cisco 7606 r04gva configuration

interface TenGigabitEthernet2/1

description ----> Trunk to cernh5 - T320

mtu 9216

no ip address

load-interval 30

flowcontrol send on

switchport

switchport trunk native vlan 666

switchport trunk allowed vlan 1,2,20,570,595,1002-1005

switchport trunk pruning vlan 3-19,21-569,571-594,596-1001

switchport mode trunk

no cdp enable

interface GigabitEthernet4/3

description ----> w03gva

mtu 9216

no ip address

load-interval 30

mls qos trust dscp

switchport

switchport access vlan 595

switchport mode access

no cdp enable

Juniper T320 cernh5 configuration

interfaces {

so-1/0/0 {

description "----> STM64 Starlight - 010490-041030 044/3003 T-Systems";

mtu 9192;

encapsulation cisco-hdlc;

sonet-options {

fcs 32;

}

unit 0 {

family inet {

mtu 9188;

address 192.65.184.54/30;

}

family iso;

family inet6 {

address 2001:1458:E000:0001::2/126;

}

family mpls {

mtu 9188;

}

}

}

ge-1/1/0 {

description "----> trunk to r04gva";

vlan-tagging;

mtu 9192;

encapsulation vlan-ccc;

unit 595 {

description "----> test ccc procket";

encapsulation vlan-ccc;

vlan-id 595;

family ccc;

}

}

}

routing-options {

static {

route 192.91.237.36/30 {

next-hop 192.65.184.53;

no-readvertise;

}

}

}

protocols {

rsvp {

interface all;

}

mpls {

explicit-null;

label-switched-path cernh5-r05chi {

from 192.65.184.54;

to 192.91.237.38;

no-cspf;

}

interface all;

}

connections {

remote-interface-switch CCC-THROUGH-PROCKET {

interface ge-1/1/0.595;

transmit-lsp cernh5-r05chi;

receive-lsp r05chi-cernh5;

}

}

}

Juniper T320 ar5-chicago configuration

interfaces {

so-0/0/0 {

description "----> Connected to PROCKET";

mtu 9192;

encapsulation cisco-hdlc;

sonet-options {

fcs 32;

}

unit 0 {

family inet {

address 192.91.237.33/30;

}

family mpls;

}

}

so-1/0/0 {

description "----> STM64 CERN - 010490-041030 044/3003 T-Systems";

mtu 9192;

encapsulation cisco-hdlc;

sonet-options {

fcs 32;

}

unit 0 {

family inet {

mtu 9188;

address 192.65.184.53/30;

}

family iso;

family inet6 {

address 2001:1458:E000:0001::1/126;

}

family mpls {

mtu 9188;

}

}

}

}

routing-options {

static {

route 192.91.237.36/30 {

next-hop 192.91.237.34;

no-readvertise;

}

}

}

protocols {

rsvp {

interface all;

}

mpls {

interface all;

}

}

Procket 8801 r07chi configuration

interface 10GigEther0/0/0

mpls te tunnels

description ----> r04chi

mtu 9178

flow-control both

ip address 192.91.237.37/30

!

interface 10GigEther0/1/0

!

interface POS0/2/0

mpls te tunnels

description ----> ar5-chicago

pos framing sonet

mtu 9188

encapsulation hdlc

ip route 192.65.184.52/30 192.91.237.33

Cisco 7609 r04chi configuration

interface GigabitEthernet3/9

description ----> r05chi - trunk

mtu 9216

no ip address

logging event link-status

load-interval 30

switchport

switchport trunk encapsulation dot1q

switchport trunk allowed vlan 4,22,570

switchport mode trunk

no cdp enable

end

interface TenGigabitEthernet1/2

description ----> procket

no ip address

switchport

switchport access vlan 22

switchport mode access

no cdp enable

end

interface GigabitEthernet3/10

description ----> w06chi - eth0 - TEST

mtu 9216

no ip address

load-interval 30

switchport

switchport access vlan 595

switchport mode access

no cdp enable

end

Juniper M10 r05chi configuration

interfaces {

ge-0/1/0 {

description "----> r04chi gi3/9 - TRUNK";

enable;

vlan-tagging;

mtu 9192;

unit 22 {

vlan-id 22;

family inet {

address 192.91.237.38/30;

}

family mpls {

mtu 9000;

}

}

}

ge-0/3/0 {

description "----> r04chi gi3/6 - Testing";

vlan-tagging;

mtu 9192;

encapsulation vlan-ccc;

unit 595 {

encapsulation vlan-ccc;

vlan-id 595;

family ccc;

}

}

}

routing-options {

static {

route 192.65.184.52/30 {

next-hop 192.91.237.37;

no-readvertise;

}

}

}

protocols {

rsvp {

interface all;

interface ge-0/1/0.22;

}

mpls {

label-switched-path r05chi-cernh5 {

from 192.91.237.38;

to 192.65.184.54;

no-cspf;

}

interface all;

}

connections {

remote-interface-switch CCC-THROUGH-PROCKET {

interface ge-0/3/0.595;

transmit-lsp r05chi-cernh5;

receive-lsp cernh5-r05chi;

}

}

}

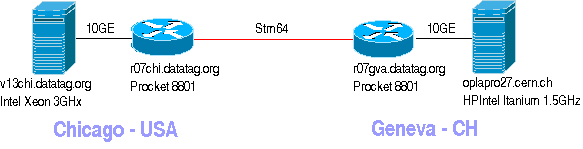

IPv6 Land Speed Record attempt

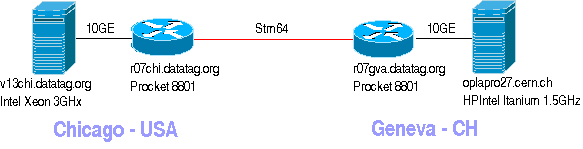

On October 31st, 2003, an international team of scientists from CERN and CALTECH tried to set a new Internet2 Land Speed Record using IPv6, the next generation Internet Protocol.

The record attempt

The record attempt described in this document consisted of achieving a throughput of 3.867 gigabits-per-second with a single TCP stream over IPv6 for three hours across a distance of 7,067 kilometers from CERN (Geneva, Switzerland) to the CALTECH/CERN PoP at Starlight (Chicago, Illinois, US).

To achieve this record, a special testbed was set up using the resources of the Datatag project. Here is the outline:

Test Results

The following table summarizes the results of end-to-end data transfers between oplapro27.cern.ch, the Intel Itanium server at CERN (Geneva, Switzerland), and v13chi.datatag.org, the Intel Xeon server at StarLight (Chicago, Illinois/US). The data was transferred from memory to memory using a single TCP stream. The program used to generate the stream and to measure the performance was Iperf version 1.7.0.

| MTU (bytes) | Duration (sec) | Data Transferred (GigaBytes) | Throughput (Gbits/sec) | iperf switches and output | tcpdump output |
|---|---|---|---|---|---|
| 8152 | 10900 | 5264 | 3.867 | see appendix | not available |
| 8152 | 60 | 27.1 | 3.762* | see appendix | - -x -c15 output |

*Note: Because the transfer took 100% of the CPU on both the sender and receiver, we could not run a tcpdump during the transfer without affecting the performance significantly. However, we ran a tcpdump into a memory file (/dev/shm) on the sender, for a short period of time (1 minute, due to file size issues).

The competition awards the highest throughput over the longest distance, so the value submitted had to be calculated in this way:

Throughput * Distance = 3867 Mb/s * 7067 km = 27328 Tbm/s (terabit-meters per second)

Note: the distance between Geneva and Chicago was measured according to Zenith Aircraft Company's Virtual GPS.
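
The unit conversion behind the submitted value can be checked with a short calculation. The function name below is hypothetical; the throughput and distance are the figures quoted above.

```python
def lsr_metric_tbm_per_s(throughput_mbps: float, distance_km: float) -> float:
    """Throughput x distance, expressed in terabit-meters per second."""
    bits_per_second = throughput_mbps * 1e6
    meters = distance_km * 1e3
    return bits_per_second * meters / 1e12


print(round(lsr_metric_tbm_per_s(3867, 7067)))  # 27328
```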

Equipment involved

- At CERN: HP RX2600 workstation
  - Dual Itanium2 1.5 GHz CPU
  - 4 GB RAM
  - Intel PRO/10GbE LR network adapter
  - Linux kernel 2.6.0-test5
- At Chicago: PC workstation
  - Dual Xeon 3.06 GHz CPU
  - Supermicro X5DPE motherboard (E7501 chipset)
  - 2 GB RAM
  - Intel PRO/10GbE LR network adapter
  - Linux kernel 2.4.22
- Procket 8801 routers - o.s. PRO/1 version 2.2.0.139-P and 2.3.0.180-B

The two workstations generated and received the stream.

The Procket PRO/8801 routers provided the necessary IPv6 routing capabilities, in order to have three hops between source and destination. Procket routers are able to transfer IPv6 traffic at line speed.

Below is a more detailed description and explanation of the configuration parameters of the equipment, the operating system and interfaces of the workstations, as well as the Procket router configurations.

Comments

Unfortunately the record was not homologated because the data in the TCP packets was always the same, which broke one of the rules of the contest.

The behaviour of the PRO/8801 routers was very good and stable. The attempts could run for more than three hours without any packet loss and at a constant rate. It is important to note that the limit was due to the performance of the end hosts and not to the network.

IPv6 was not yet supported by the PRO/8801 routing protocols, so IPv6 routing relied only on static routes.

A bug was noticed: the IPv6 router advertisement messages announced the wrong MTU to the connected hosts (they reported the default value and not the configured one). This was reported to Procket support, who will correct it.

The Judging Decision

Date: Wed, 19 Nov 2003 19:19:44 -0500

To: Edoardo Martelli <edoardo.martelli@cern.ch>, lsr@internet2.edu

From: Richard Carlson <rcarlson501@comcast.net>

Subject: New IPv6 Single TCP stream LSR submission - Judging Decision

Cc: Olivier Martin <olivier.martin@cern.ch>,

Daniel Davids <daniel.davids@cern.ch>

Dear Edoardo;

It is with deep regret that I write to inform you that the Interent2 Land

Speed Record judging committee can not approve this I2-LSR IPv6 record

submission.

A review of the data you supplied shows that each TCP data packet contains

identical data. This means that your submission does not meet the

requirements of rule 6 "...Received data must vary in content from buffer

to buffer and...". The problem is that the TCP MSS used for this record

exactly matches the Iperf buffer size (8092 KB), thus each TCP packet

contains the identical data.

The I2-LSR judging committee encourages you to correct this problem and to

re-submit a new IPv6 record claim.

Best Regards;

Rich Carlson I2-LSR Judging committee chair
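
The effect described in the judging decision can be reproduced with a toy model. The sketch below assumes that the sender writes the same application buffer over and over and that TCP simply cuts the resulting byte stream into consecutive segments of MSS bytes; both the model and the function name are illustrative assumptions, and the sizes are toy values rather than those of the record attempt.

```python
def distinct_payloads(buffer: bytes, mss: int, segments: int) -> int:
    """Count distinct TCP payloads when `buffer` is sent over and over.

    The byte stream is the buffer repeated back to back; TCP is modelled
    (very roughly) as cutting it into consecutive segments of `mss` bytes.
    """
    repeats = (mss * segments) // len(buffer) + 1
    stream = buffer * repeats
    payloads = {stream[i * mss:(i + 1) * mss] for i in range(segments)}
    return len(payloads)


buf = bytes(range(8))                  # toy 8-byte application buffer
print(distinct_payloads(buf, 8, 16))   # MSS equal to the buffer size -> 1
print(distinct_payloads(buf, 6, 16))   # MSS different from the buffer size -> 4
```

When the segment size equals the buffer size, every segment starts at the same offset in the buffer, so all payloads are identical, which is the condition that the committee objected to.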

Background

Responsiveness to packet losses is proportional to the square of the RTT (Round Trip Time): R = C*(RTT**2)/(2*MSS) (where C is the link capacity and MSS is the maximum segment size). This makes it very difficult to take advantage of the full capacity over a long-distance WAN: this is not a real problem for standard traffic on a shared link, but it is a serious penalty for long-distance transfers of large amounts of data.

The TCP stack was designed a long time ago and for much slower networks: from the above formula, if C is very small, the responsiveness is kept low enough for any terrestrial RTT. Modern, fast WAN links are "bad" for TCP performance, since TCP tries to increase its window size until something breaks (packet loss, congestion), then restarts from half of the previous value until it breaks again. This gradual approximation process takes very long over long distances and degrades performance.

Knowing the available bandwidth a priori, TCP can be prevented from trying larger windows by restricting the amount of buffers it may use: without buffers, it won't try to use larger windows, and packet losses due to congestion of the link can be avoided.

The product C*RTT yields the optimal TCP window size for a link of capacity C, so it's sufficient to allocate just enough buffers to let TCP reach the maximum performance from the existing bandwidth, without attempting to go beyond.
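
The two quantities used in this background can be evaluated numerically. The sketch below is only an illustration: the function names are hypothetical, the example values of C, RTT and MSS are not measurements from this test, and R is interpreted as a time in seconds (which holds when C is in bits per second and MSS is converted to bits).

```python
def bdp_bytes(capacity_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product C*RTT, in bytes: the optimal TCP window."""
    return capacity_bps * rtt_s / 8


def responsiveness_s(capacity_bps: float, rtt_s: float, mss_bytes: int) -> float:
    """R = C * RTT**2 / (2 * MSS), with MSS converted from bytes to bits."""
    return capacity_bps * rtt_s ** 2 / (2 * mss_bytes * 8)


# Hypothetical example values (not measurements from this test).
C = 1e9        # link capacity: 1 Gbit/s
RTT = 0.1      # round trip time: 100 ms
MSS = 1500     # maximum segment size, in bytes

print(bdp_bytes(C, RTT))               # buffer needed to fill the link, in bytes
print(responsiveness_s(C, RTT, MSS))   # R in seconds for these values
```

With these example values the window needed to fill the link is about 12.5 megabytes and R is about 417 seconds, which shows why a single loss is so costly on a fast long-distance path and why a larger MSS helps.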

- A data transfer must run for a minimum of 10 continuous minutes over a minimum terrestrial distance of 100 kilometers with a minimum of two router hops in each direction between the source node and the destination node across one or more operational and production-oriented high-performance research and education networks. Examples of such networks are Abilene, ESnet, CA*net3, NREN and GEANT.

- All data must be transferred end-to-end between a single pair of

IP addresses by bona fide TCP/IP protocol code implementations of RFC

793 and RFC 791. One IP address is designated the source IP

address, while the other is designated the destination address.

All data must be transferred in TCP packets encapsulated in IP packets

that use no other IP addresses other than the designated source IP

address and the designated destination IP address. The source

node is defined as the equipment associated with the source IP address

and which executes the data-source network application, while the

destination node is defined as the equipment associated with the

destination IP address and which executes the data-sink network

application.

- Instances of all hardware units and software modules used to

transfer contest data on the source node, the destination node, the

links, and the routers must be offered for commercial sale or as open

source software to all U.S. members of the Internet2 community by their

respective vendors or developers prior to or immediately after winning

the contest.

- Each entry must include sufficient information that adherence to

all contest rules can be readily determined. Entries must also

identify institution(s) and personnel requesting consideration as

winners. Entries must be submitted no later than two weeks prior

to each Member Meeting in order to receive recognition during the

meeting.

- Winners will be recognised in each of the following separate

classes:

- The IPv4 Single-Stream Class winner must utilise a single

TCP/IPv4 session.

- The IPv4 Multiple-Stream Class winner may utilise multiple

concurrent TCP/IPv4 sessions.

- The IPv6 Single-Stream Class winner must utilise a single

TCP/IPv6 session.

- The IPv6 Multiple-Stream Class winner may utilise multiple

concurrent TCP/IPv6 sessions.

Any record setter in a single stream class will automatically be

considered for the multiple stream record in the same category.

- In computing the amount of data transferred, only data

transferred from user-process-space buffer(s) in the data-source

network application to user-process-space buffer(s) in the data-sink

network application may be counted. Received data must vary in

content from buffer to buffer and must be verified by checksum or other

means that it is identical to the data transmitted from the source

node. The elapsed time of the data transfer is the real time that

elapses between the source sending a packet with the SYN bit enabled

and sending a packet with the ACK bit enabled following the receipt of

a packet(s) with the FIN and ACK bits enabled.

- The winner in each Class will be whichever submitter is judged to

have met all rules and whose results yield the largest value of the

product of the achieved bandwidth (bits/second) multiplied by the sum

of the terrestrial distances between each router location starting from

the source node location and ending at the destination node location,

using the shortest distance of the Earth's circumference measured in

meters between each pair of locations. The contest unit of

measurement is thus bit-meters/second.

- A Contest Entry Judging Panel chosen by Internet2 staff from the

Internet2 community will determine winners in each class according to

the stated rules.

- A winning entry must exceed the previous winning entry by at

least 10%.

- A Contest Rules Panel chosen by Internet2 staff will review,

revise, clarify, and interpret rules as necessary to ensure the spirit

of the competition. Contestants may request published rule

interpretations from the Panel via email.
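The scoring rule above (achieved bandwidth multiplied by the sum of the shortest terrestrial distances between consecutive router locations) can be illustrated with a minimal Python sketch. The coordinates, the mean Earth radius and the function names are assumptions made for illustration only; they are not taken from the contest rules or from the official record submission.

```python
import math

# Assumption: mean Earth radius in metres (the rules do not specify a value).
EARTH_RADIUS_M = 6_371_000.0

def great_circle_m(a, b):
    """Shortest surface distance in metres between two (lat, lon) points in degrees (haversine)."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(h)))

def lsr_metric(bandwidth_bps, locations):
    """Contest metric in bit-metres/second: bandwidth times the summed hop distances."""
    path_m = sum(great_circle_m(p, q) for p, q in zip(locations, locations[1:]))
    return bandwidth_bps * path_m

if __name__ == "__main__":
    # Hypothetical, approximate coordinates for Geneva and Chicago.
    geneva = (46.2, 6.1)
    chicago = (41.9, -87.6)
    # 3.87 Gbit/s is the rate reported by iperf in this report.
    print(f"{lsr_metric(3.87e9, [geneva, chicago]):.3e} bit-metres/second")
```

Because the metric sums the distance of every router-to-router segment, a path with intermediate router locations would list them in order in `locations`.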

OS, Interfaces & Router Configurations

Workstation oplapro27.cern.ch at CERN (Switzerland)

#uname -a

Linux oplapro27 2.6.0-test5 #2 SMP Tue Sep 16 13:51:36 CEST 2003 ia64 unknown

#ifconfig eth2

eth2 Link encap:Ethernet HWaddr 00:07:E9:0D:41:59

inet addr:192.168.1.2 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: 2001:1458:e000:100:207:e9ff:fe0d:4159/64 Scope:Global

inet6 addr: fe80::207:e9ff:fe0d:4159/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:8152 Metric:1

RX packets:103460474 errors:117 dropped:226 overruns:113 frame:115

TX packets:286528300 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:10000

RX bytes:83321658503 (79461.7 Mb) TX bytes:1226468782034 (1169651.7 Mb)

Interrupt:65 Base address:0x8000 Memory:c8040000-c8048000

# set mmrbc to 4k reads, modify only Intel 10GbE device IDs

setpci -d 8086:1048 e6.b=2e

# set the MTU (max transmission unit) and the txqueuelen

ifconfig eth2 mtu 8152 txqueuelen 30000 up

# TCP specific settings

net.ipv4.tcp_timestamps = 0 # turns TCP timestamp support off, default 1, reduces CPU use

net.ipv4.tcp_sack = 0 # turn SACK support off, default on

# on systems with a VERY fast bus -> memory interface this is the big gainer

net.ipv4.tcp_rmem = 100000000 100000000 100000000 # sets min/default/max TCP read buffer, default 4096 87380 174760

net.ipv4.tcp_wmem = 100000000 100000000 100000000 # sets min/default/max TCP write buffer, default 4096 16384 131072

net.ipv4.tcp_mem = 100000000 100000000 100000000 # sets min/pressure/max TCP buffer space, default 31744 32256 32768

# CORE settings (mostly for socket and UDP effect)

net.core.rmem_max = 409715100 # maximum receive socket buffer size, default 131071

net.core.wmem_max = 409715100 # maximum send socket buffer size, default 131071

net.core.rmem_default = 409715100 # default receive socket buffer size, default 65535

net.core.wmem_default = 409715100 # default send socket buffer size, default 65535

net.core.optmem_max = 409715100 # maximum amount of option memory buffers, default 10240

net.core.netdev_max_backlog = 300000 # number of unprocessed input packets before kernel starts dropping them, default 300

net.core.rmem_max=409715100

net.core.rmem_default=409715100

Workstation v13chi.datatag.org at Starlight (Chicago - US)

# uname -a

Linux v13chi 2.4.22-xfs #2 SMP Thu Sep 4 05:23:50 PDT 2003 i686 i686 i386 GNU/Linux

# ifconfig eth2

eth2 Link encap:Ethernet HWaddr 00:07:E9:0D:41:45

inet addr:192.168.2.2 Bcast:192.168.2.255 Mask:255.255.255.0

inet6 addr: fe80::207:e9ff:fe0d:4145/64 Scope:Link

inet6 addr: 2001:1458:e000:200:207:e9ff:fe0d:4145/64 Scope:Global

UP BROADCAST RUNNING MULTICAST MTU:8152 Metric:1

RX packets:138896343 errors:32802 dropped:65590 overruns:32795 frame:32795

TX packets:19879068 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:30000

RX bytes:2666061450 (2542.5 Mb) TX bytes:1549457461 (1477.6 Mb)

Interrupt:48 Base address:0x3000 Memory:fb200000-fb208000

# set mmrbc to 4k reads, modify only Intel 10GbE device IDs

setpci -d 8086:1048 e6.b=2e

# set the MTU (max transmission unit) and the txqueuelen

ifconfig eth2 mtu 8152 txqueuelen 30000 up

# TCP specific settings

net.ipv4.tcp_timestamps = 0 # turns TCP timestamp support off, default 1, reduces CPU use

net.ipv4.tcp_sack = 0 # turn SACK support off, default on

# on systems with a VERY fast bus -> memory interface this is the big gainer

net.ipv4.tcp_rmem = 100000000 100000000 100000000 # sets min/default/max TCP read buffer, default 4096 87380 174760

net.ipv4.tcp_wmem = 100000000 100000000 100000000 # sets min/default/max TCP write buffer, default 4096 16384 131072

net.ipv4.tcp_mem = 100000000 100000000 100000000 # sets min/pressure/max TCP buffer space, default 31744 32256 32768

# CORE settings (mostly for socket and UDP effect)

net.core.rmem_max = 409715100 # maximum receive socket buffer size, default 131071

net.core.wmem_max = 409715100 # maximum send socket buffer size, default 131071

net.core.rmem_default = 409715100 # default receive socket buffer size, default 65535

net.core.wmem_default = 409715100 # default send socket buffer size, default 65535

net.core.optmem_max = 409715100 # maximum amount of option memory buffers, default 10240

net.core.netdev_max_backlog = 300000 # number of unprocessed input packets before kernel starts dropping them, default 300

net.core.rmem_max=409715100

net.core.rmem_default=409715100
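The TCP buffer sizes configured on the two workstations can be put in context with a bandwidth-delay product estimate. The sketch below is only an illustration: it assumes a round-trip time of about 120 ms (in line with the tracepath output reported in this document) and compares the measured rate with a nominal 10 Gbit/s line rate; the function name is hypothetical.

```python
def bdp_bytes(bandwidth_bps, rtt_s):
    """Bandwidth-delay product: bytes that must be in flight to keep the path full."""
    return bandwidth_bps * rtt_s / 8

# Assumption: RTT of roughly 120 ms between Geneva and Chicago.
RTT_S = 0.120
# tcp_rmem / tcp_wmem value configured on both workstations (bytes).
CONFIGURED_BUFFER = 100_000_000

if __name__ == "__main__":
    for label, rate in (("measured 3.87 Gbit/s", 3.87e9), ("nominal 10 Gbit/s", 10e9)):
        need = bdp_bytes(rate, RTT_S)
        fits = need <= CONFIGURED_BUFFER
        print(f"{label}: BDP = {need / 1e6:.1f} MB, fits in configured buffer: {fits}")
```

Under these assumptions a single stream at the measured rate needs roughly 58 MB in flight, which is within the 100 MB buffers configured above.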

Procket 8801 at CERN (Switzerland)

r07gva# sh ver

PRO/1-MSE Procket Modular Service Environment

System Uptime: 10 day(s), 03:00:07

Protocol Uptime: 1 day(s), 06:33:36

System Release Version: 2.3.0.180-B

Build Information: Wed Oct 15 13:43:53 PDT 2003 (sw-build)

Kernel Version: 2.3.0.1-P PowerPC

Total Physical Memory: 112 MB kernel, 1919 MB user

r07gva# sh inventory

PRO/1-MSE Procket Modular Service Environment

System Release Version: 2.3.0.180-B

Build Information: Wed Oct 15 13:43:53 PDT 2003 (sw-build)

Kernel Version: 2.3.0.1-P PowerPC

Inventory:

Item Number Serial No Part Number HW-Ver CLEI code

BP CA8-1-AC-A 01TJ121370 561-0090-000 09 IPM6E00BRA

RP0 RP8-A 0103193829 561-0052-000 32 IP1CPT0HAA

PEM0 PR8-1-4-AC-A 01TJ100935 552-0052-200 05 __________

PEM1 PR8-1-4-AC-A 01TJ100935 552-0052-200 05 __________

FAN0 CT8-1-4-A 01TJ070386 561-0093-002 02 IP1CMR0HAA

SC0 SC8-1-B 01TJ121396 561-0187-000 03 IP1CNSXHAA

LC0 LC8-B 01TR510662 561-0159-000 09 IP1CRUVHAA

MA0/3 M8-10-GEMS-A 0103131818 561-0058-300 01 IP6IPNLDAA

MA0/2 M8-1-192PSS-A 0103234992 561-0056-300 02 IP6IRSLDAA

MA0/1 M8-1-10GESL-A 01TR520749 561-0064-300 01 IP6IPRLDAA

MA0/0 M8-1-10GESL-A 01TR520748 561-0064-300 01 IP6IPRLDAA

r07gva# sh run

Current configuration:

!

! Last Changed: Thu Oct 30 17:15:50 2003

!

version 2.3

!

router-name r07gva

!

ip service telnet

ip service ftp

!

aaa new-model

aaa authentication login default radius local

aaa authentication enable default radius local

aaa authorization exec default radius local

aaa authorization commands 0 default radius local

aaa accounting commands 0 default start-stop radius

service password-encryption

radius-server host 192.91.244.20 key 3 xxxxxxxxxxx

!

logging file messages facility any level debugging

!

interface Loopback0

ip address 192.91.239.249/32

!

interface MgmtEther0

ip address 192.91.244.50/27

!

interface 10GigEther0/0/0

description ----> PC HP

mtu 9178

ip address 192.168.1.1/24

ipv6 address 2001:1458:e000:0100::0007/64

!

interface POS0/2/0

description ----> stm64 to Chicago

clock source internal

pos framing sonet

mtu 9188

ip address 192.65.184.54/30

ipv6 address 2001:1458:e000:0001::0001/126

encapsulation hdlc

!

ip route 0.0.0.0/0 192.91.239.202

ip route 192.91.245.64/27 192.91.238.6

ip route 192.168.2.0/24 192.65.184.53

!

ipv6 route 2001:1458:e000:0200::/64 2001:1458:e000:0001::0002

!

ntp server 192.91.244.10

!

route-context-config management

ip route 0.0.0.0/0 192.91.244.33

!

end

Procket 8801 at Starlight (Chicago - US)

r07chi# sh ver

PRO/1-MSE Procket Modular Service Environment

System Uptime: 28 day(s), 00:26:49

Protocol Uptime: 28 day(s), 00:26:22

System Release Version: 2.2.0.139-P

Build Information: Wed Aug 6 00:21:36 PDT 2003 (sw-build)

Kernel Version: 2.2.0.1-P PowerPC

Total Physical Memory: 112 MB kernel, 1919 MB user

r07chi# sh inventory

PRO/1-MSE Procket Modular Service Environment

System Release Version: 2.2.0.139-P

Build Information: Wed Aug 6 00:21:36 PDT 2003 (sw-build)

Kernel Version: 2.2.0.1-P PowerPC

Inventory:

Item Number Serial No Part Number HW-Ver CLEI code

BP CA8-1-AC-A 0103162967 561-0090-000 09 IPM6E00BRA

RP0 RP8-A 01TJ121299 561-0052-000 32 IP1CPT0HAA

PEM0 PR8-1-4-AC-A 01TJ121387 552-0052-200 05 __________

PEM1 PR8-1-4-AC-A 01TJ121387 552-0052-200 05 __________

FAN0 CT8-1-4-A 0103142030 561-0093-002 02 IP1CMR0HAA

SC0 SC8-1-B 01TJ111046 561-0187-000 03 IP1CNSXHAA

LC0 LC8-B 01TJ090473 561-0159-000 09 IP1CRUVHAA

MA0/3 M8-10-GEMS-A 0103131815 561-0058-300 01 IP6IPNLDAA

MA0/2 M8-1-192PSS-A 0103204014 561-0056-300 01 IP6IRSLDAA

MA0/1 M8-1-10GESL-A 0103204016 561-0064-300 01 IP6IPRLDAA

MA0/0 M8-1-10GESL-A 0103141958 561-0064-300 01 IP6IPRLDAA

r07chi# sh run

Current configuration:

!

! Last Changed: Thu Oct 30 18:19:32 2003

!

version 2.2

!

router-name r07chi

!

ip service telnet

ip service ftp

!

username root allow-shell privilege 15 password 5 xxxxxxxxxxxx

username paolo allow-shell privilege 15 password 5 xxxxxxxxxxx

service password-encryption

!

logging file messages facility any level debugging

!

interface MgmtEther0

ip address 192.91.246.243/26

!

interface 10GigEther0/0/0

description ----> v13chi

mtu 9178

ip address 192.168.2.1/24

ipv6 address 2001:1458:e000:0200::0007/64

!

interface POS0/2/0

description ----> stm64 to CERN

pos framing sonet

mtu 9188

ip address 192.65.184.53/30

ipv6 address 2001:1458:e000:0001::0002/126

encapsulation hdlc

!

!

ip route 192.168.1.0/24 192.65.184.54

!

ipv6 route 2001:1458:e000:0100::/64 2001:1458:e000:0001::0001

!

controllers line-card 0

!

route-context-config management

ip route 0.0.0.0/0 192.91.246.193

!

end
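As a quick consistency check of the addressing in the two router configurations, the following Python sketch verifies that both ends of the POS link share the same IPv4 /30 and IPv6 /126, and that the static-route next hops fall inside the locally connected subnet. It uses only the standard `ipaddress` module; the helper names are hypothetical and the addresses are copied from the configurations above.

```python
import ipaddress

def same_subnet(a, b):
    """True if two interface addresses (with prefix length) belong to the same network."""
    return ipaddress.ip_interface(a).network == ipaddress.ip_interface(b).network

def next_hop_on_link(next_hop, local_interface):
    """True if a static-route next hop lies inside the subnet of the local interface."""
    return ipaddress.ip_address(next_hop) in ipaddress.ip_interface(local_interface).network

if __name__ == "__main__":
    # POS0/2/0 addresses of r07gva and r07chi
    print(same_subnet("192.65.184.54/30", "192.65.184.53/30"))
    print(same_subnet("2001:1458:e000:0001::0001/126", "2001:1458:e000:0001::0002/126"))
    # r07gva: ip route 192.168.2.0/24 192.65.184.53
    print(next_hop_on_link("192.65.184.53", "192.65.184.54/30"))
    # r07chi: ipv6 route 2001:1458:e000:0100::/64 2001:1458:e000:0001::0001
    print(next_hop_on_link("2001:1458:e000:0001::0001", "2001:1458:e000:0001::0002/126"))
```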

Iperf Outputs & Command Line Options

10900 seconds test

Server side:

#iperf -i 300 -s -w 120M -m -V -B 2001:1458:e000:200:207:e9ff:fe0d:4145

[ 6] local 2001:1458:e000:200:207:e9ff:fe0d:4145 port 5001 connected with 2001:1458:e000:100:207:e9ff:fe0d:4159 port 32798

[ ID] Interval Transfer Bandwidth

[ 6] 0.0-300.0 sec 135 GBytes 3.85 Gbits/sec

[ 6] 300.0-600.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 600.0-900.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 900.0-1200.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 1200.0-1500.0 sec 135 GBytes 3.88 Gbits/sec

[ 6] 1500.0-1800.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 1800.0-2100.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 2100.0-2400.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 2400.0-2700.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 2700.0-3000.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 3000.0-3300.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 3300.0-3600.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 3600.0-3900.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 3900.0-4200.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 4200.0-4500.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 4500.0-4800.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 4800.0-5100.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 5100.0-5400.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 5400.0-5700.0 sec 135 GBytes 3.88 Gbits/sec

[ 6] 5700.0-6000.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 6000.0-6300.0 sec 135 GBytes 3.88 Gbits/sec

[ 6] 6300.0-6600.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 6600.0-6900.0 sec 135 GBytes 3.88 Gbits/sec

[ 6] 6900.0-7200.0 sec 135 GBytes 3.88 Gbits/sec

[ 6] 7200.0-7500.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 7500.0-7800.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 7800.0-8100.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 8100.0-8400.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 8400.0-8700.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 8700.0-9000.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 9000.0-9300.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 9300.0-9600.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 9600.0-9900.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 9900.0-10200.0 sec 135 GBytes 3.88 Gbits/sec

[ 6] 10200.0-10500.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 10500.0-10800.0 sec 135 GBytes 3.87 Gbits/sec

[ 6] 0.0-10882.6 sec 5269033885696 bits 3.87 Gbits/sec

[ 6] MSS size 7168 bytes (MTU 7208 bytes, unknown interface)

[ 6] Read lengths occurring in more than 5% of reads:

[ 6] 8192 bytes read 643192604 times (100%)

Client side:

#iperf -i 60 -f m -m -t 10900 -w 120M -c 2001:1458:e000:200:207:e9ff:fe0d:4145 -V -B 2001:1458:e000:100:207:e9ff:fe0d:4159

------------------------------------------------------------

Client connecting to 2001:1458:e000:200:207:e9ff:fe0d:4145, TCP port 5001

Binding to local address 2001:1458:e000:100:207:e9ff:fe0d:4159

TCP window size: 240 MByte (WARNING: requested 120 MByte)

------------------------------------------------------------

[ 5] local 2001:1458:e000:100:207:e9ff:fe0d:4159 port 32798 connected with 2001:1458:e000:200:207:e9ff:fe0d:4145 port 5001

[...]

[ 5] 0.0-10900.5 sec 5024942 MBytes 3867 Mbits/sec

[ 5] MSS size 8092 bytes (MTU 8132 bytes, unknown interface)

[ ID] Interval Transfer Bandwidth

Note that iperf derives the MTU shown here from the MSS assuming an IPv4 header (20 bytes)

instead of an IPv6 header (40 bytes), so the reported value is 20 bytes too small.
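The summary lines of the 10900 seconds test can be cross-checked with a few lines of Python. This is only a sketch under two assumptions: that the total printed on the server side is a byte count (the "bits" label does not fit the reported rate), and that iperf's "MBytes" are 2^20 bytes while its "Mbits/sec" are 10^6 bits per second.

```python
def throughput_bps(total_bytes, seconds):
    """Average throughput in bits per second for a transfer of total_bytes in seconds."""
    return total_bytes * 8 / seconds

# Server side: total and elapsed time from the final summary line.
SERVER_TOTAL_BYTES = 5269033885696   # assumption: value is in bytes
SERVER_SECONDS = 10882.6

# Client side: 5024942 MBytes in 10900.5 seconds.
CLIENT_TOTAL_BYTES = 5024942 * 2**20  # assumption: 1 MByte = 2**20 bytes
CLIENT_SECONDS = 10900.5

if __name__ == "__main__":
    print(f"server: {throughput_bps(SERVER_TOTAL_BYTES, SERVER_SECONDS) / 1e9:.2f} Gbit/s")
    print(f"client: {throughput_bps(CLIENT_TOTAL_BYTES, CLIENT_SECONDS) / 1e6:.0f} Mbit/s")
```

Under these assumptions both sides agree with the rates printed by iperf, about 3.87 Gbit/s.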

60 seconds test for tcpdump data collection

Server side:

# iperf -i 10 -s -w 150M -m -V -B 2001:1458:e000:200:207:e9ff:fe0d:4145

------------------------------------------------------------

Server listening on TCP port 5001

Binding to local address 2001:1458:e000:200:207:e9ff:fe0d:4145

TCP window size: 300 MByte (WARNING: requested 150 MByte)

------------------------------------------------------------

[ 6] local 2001:1458:e000:200:207:e9ff:fe0d:4145 port 5001 connected with 2001:1458:e000:100:207:e9ff:fe0d:4159 port 32841

[ ID] Interval Transfer Bandwidth

[ 6] 0.0-10.0 sec 3.74 GBytes 3.21 Gbits/sec

[ 6] 10.0-20.0 sec 4.48 GBytes 3.85 Gbits/sec

[ 6] 20.0-30.0 sec 4.54 GBytes 3.90 Gbits/sec

[ 6] 30.0-40.0 sec 4.45 GBytes 3.83 Gbits/sec

[ 6] 40.0-50.0 sec 4.58 GBytes 3.93 Gbits/sec

[ 6] 50.0-60.0 sec 4.50 GBytes 3.86 Gbits/sec

[ 6] 0.0-60.3 sec 26.5 GBytes 3.78 Gbits/sec

[ 6] MSS size 7168 bytes (MTU 7208 bytes, unknown interface)

[ 6] Read lengths occurring in more than 5% of reads:

[ 6] 8192 bytes read 3476375 times (100%)

Client side:

#iperf -i 10 -f m -m -t 60 -w 150M -c 2001:1458:e000:200:207:e9ff:fe0d:4145 -V -B 2001:1458:e000:100:207:e9ff:fe0d:4159

------------------------------------------------------------

Client connecting to 2001:1458:e000:200:207:e9ff:fe0d:4145, TCP port 5001

Binding to local address 2001:1458:e000:100:207:e9ff:fe0d:4159

TCP window size: 300 MByte (WARNING: requested 150 MByte)

------------------------------------------------------------

[ 5] local 2001:1458:e000:100:207:e9ff:fe0d:4159 port 32841 connected with 2001:1458:e000:200:207:e9ff:fe0d:4145 port 5001

[ ID] Interval Transfer Bandwidth

[ 5] 0.0-10.0 sec 4164 MBytes 3493 Mbits/sec

[ 5] 10.0-20.0 sec 4633 MBytes 3886 Mbits/sec

[ 5] 20.0-30.0 sec 4622 MBytes 3877 Mbits/sec

[ 5] 30.0-40.0 sec 4512 MBytes 3785 Mbits/sec

[ 5] 40.0-50.0 sec 4660 MBytes 3909 Mbits/sec

[ 5] 50.0-60.0 sec 4568 MBytes 3832 Mbits/sec

[ 5] 0.0-60.6 sec 27159 MBytes 3762 Mbits/sec

[ 5] MSS size 8092 bytes (MTU 8132 bytes, unknown interface)

Note that iperf derives the MTU shown here from the MSS assuming an IPv4 header (20 bytes)

instead of an IPv6 header (40 bytes), so the reported value is 20 bytes too small.
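The 20-byte discrepancy can be reproduced from the MSS reported by the client. The sketch below assumes a TCP header without options (TCP timestamps and SACK are disabled in the host settings listed earlier); the constant and function names are hypothetical.

```python
TCP_HEADER = 20    # bytes, TCP header without options (assumption)
IPV4_HEADER = 20   # bytes, IPv4 header without options
IPV6_HEADER = 40   # bytes, fixed IPv6 header

def mtu_from_mss(mss, ip_header, tcp_header=TCP_HEADER):
    """MTU implied by a TCP maximum segment size and the header sizes in front of it."""
    return mss + ip_header + tcp_header

if __name__ == "__main__":
    mss = 8092  # MSS reported by iperf on the client side
    print("iperf (IPv4 assumption):", mtu_from_mss(mss, IPV4_HEADER))
    print("actual IPv6 path MTU:   ", mtu_from_mss(mss, IPV6_HEADER))
```

With the IPv6 header size the result matches the 8152-byte MTU configured on the workstation interfaces and reported by tracepath6.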

End-to-End Traceroute Information

From Geneva (Switzerland) towards Chicago (Illinois/US)

Tracepath from 2001:1458:e000:100:207:e9ff:fe0d:4159

#tracepath6 2001:1458:e000:200:207:e9ff:fe0d:4145

1?: [LOCALHOST] pmtu 8152

1: 2001:1458:e000:100::7 3.782ms

2: 2001:1458:e000:1::2 120.301ms

3: 2001:1458:e000:200:207:e9ff:fe0d:4145 117. 77ms reached

Resume: pmtu 8152 hops 3 back 3


Differentiated Services based QoS

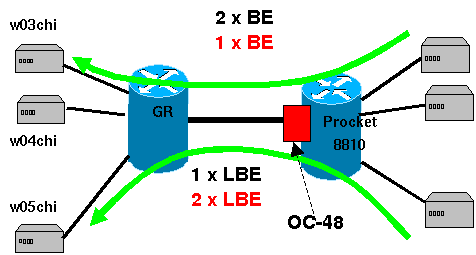

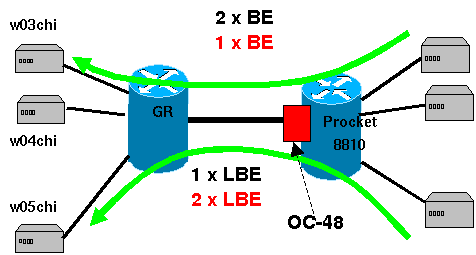

Andrea Di Donato, of University College London, took advantage of the

availability of the PRO/8801 to include it in his research

on current Differentiated Services based QoS implementations.

The test consisted of allocating different proportions of the

bottleneck bandwidth to two DS classes, namely Best Effort

(BE, DSCP=0) and Less than Best Effort (LBE, DSCP=8). The bandwidth

scheduling algorithm used was Deficit Weighted Round Robin.
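To illustrate how a Deficit Weighted Round Robin scheduler divides a bottleneck between two classes, here is a minimal generic sketch in Python. It is a textbook-style model, not the PRO/8801 implementation; the quanta (9000 and 1000 bytes), the packet size and the function name are hypothetical values chosen for the example.

```python
from collections import deque

def dwrr(queues, quanta, rounds):
    """Serve packet queues with Deficit Weighted Round Robin.

    queues: dict class name -> deque of packet sizes in bytes
    quanta: dict class name -> bytes of credit added per round (the class weight)
    Returns the number of bytes sent per class after the given number of rounds.
    """
    deficit = {name: 0 for name in queues}
    sent = {name: 0 for name in queues}
    for _ in range(rounds):
        for name, queue in queues.items():
            if not queue:
                deficit[name] = 0          # idle classes do not accumulate credit
                continue
            deficit[name] += quanta[name]
            # Send packets while the head-of-line packet fits in the credit.
            while queue and queue[0] <= deficit[name]:
                size = queue.popleft()
                deficit[name] -= size
                sent[name] += size
            if not queue:
                deficit[name] = 0
    return sent

if __name__ == "__main__":
    # Both classes are permanently backlogged with 1500-byte packets.
    queues = {"BE": deque([1500] * 1000), "LBE": deque([1500] * 1000)}
    sent = dwrr(queues, {"BE": 9000, "LBE": 1000}, rounds=100)
    total = sum(sent.values())
    for name, size in sent.items():
        print(f"{name}: {size} bytes ({100 * size / total:.1f}% of the link)")
```

When both classes are backlogged, the share of each class tends towards its quantum divided by the sum of the quanta, which is the behaviour the test set out to verify.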

Comments

The PRO/8801 behaved almost perfectly: the bandwidth allocated to

the different classes was always as expected, on both the STM16

and the 1 Gbps Ethernet interfaces.

More details are available in the document published by UCL.

Configuration

!

qos

class BE

dscp 0

class LBE

dscp 8

service-profile UCL

class BE

class LBE

queuing-discipline dwrr (BE[X], LBE[Y], default[1])

!

interface output

qos-service UCL

!

O.S. version

r07gva# sh version

PRO/1-MSE Procket Modular Service Environment

System Uptime: 57 day(s), 20:07:24

Protocol Uptime: 48 day(s), 23:40:53

System Release Version: 2.3.0.180-B

Build Information: Wed Oct 15 13:43:53 PDT 2003 (sw-build)

Kernel Version: 2.3.0.1-P PowerPC

Total Physical Memory: 112 MB kernel, 1919 MB user
